Atguigu Big Data Technology: Hadoop (MapReduce) (New), Chapter 3: MapReduce Framework Principles

2. Requirements Analysis

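MapJoin is suited to joins in which one of the tables is small enough to be held in memory. The small table is shipped to every MapTask through the distributed cache and joined against the large table in the Map phase. As a result, no Reduce phase (and no shuffle) is needed.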
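The idea can be tried outside Hadoop with a plain-Java version of the map-side (hash) join described above. The small table is loaded once into a `HashMap` (the build side), and the large table is streamed past it row by row (the probe side). This is a minimal sketch, not the tutorial's code. The class name `MapJoinSketch`, the sample rows, and the column layouts (`pid\tpname` for the small table, `orderId\tpid\tamount` for the large one) are assumptions. They were chosen to mirror the field positions the Mapper in this section reads.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical plain-Java illustration of a map-side hash join (no Hadoop involved). */
public class MapJoinSketch {

    // Build phase: load the whole small table into memory as pid -> pname.
    public static Map<String, String> buildLookup(List<String> smallTable) {
        Map<String, String> lookup = new HashMap<>();
        for (String row : smallTable) {
            String[] f = row.split("\t");
            lookup.put(f[0], f[1]);
        }
        return lookup;
    }

    // Probe phase: stream the large table once and append the matching name.
    // An unknown pid yields null, which string concatenation turns into "null".
    public static List<String> join(List<String> bigTable, Map<String, String> lookup) {
        List<String> out = new ArrayList<>();
        for (String row : bigTable) {
            String pid = row.split("\t")[1];
            out.add(row + "\t" + lookup.get(pid));
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical sample data, for illustration only.
        List<String> pd = Arrays.asList("01\tXiaomi", "02\tHuawei");
        List<String> orders = Arrays.asList("1001\t01\t1", "1002\t02\t2");
        for (String row : join(orders, buildLookup(pd))) {
            System.out.println(row);
        }
    }
}
```

Because each large-table row is matched with a single hash lookup, the join needs only one pass over the big input. This is why the Hadoop version can finish entirely inside the MapTasks.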

3. Implementation Code

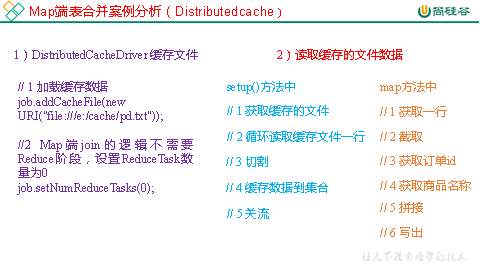

(1) First, add the cache file in the Driver

```java
package test;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class DistributedCacheDriver {

    public static void main(String[] args) throws Exception {

        // 0 Adjust these paths to match your own machine
        args = new String[]{"e:/input/inputtable2", "e:/output1"};

        // 1 Get the job instance
        Configuration configuration = new Configuration();
        Job job = Job.getInstance(configuration);

        // 2 Set the jar load path
        job.setJarByClass(DistributedCacheDriver.class);

        // 3 Associate the Mapper
        job.setMapperClass(DistributedCacheMapper.class);

        // 4 Set the final output data types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        // 5 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 6 Load the small table into the distributed cache
        job.addCacheFile(new URI("file:///e:/input/inputcache/pd.txt"));

        // 7 A map-side join needs no Reduce phase, so set the number of ReduceTasks to 0
        job.setNumReduceTasks(0);

        // 8 Submit the job and wait for it to finish
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```

(2) Read the cached file data in the Mapper

```java
package test;

import java.io.BufferedReader;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class DistributedCacheMapper extends Mapper<LongWritable, Text, Text, NullWritable> {

    // pid -> product name, loaded once per MapTask from the cached small table
    private Map<String, String> pdMap = new HashMap<>();
    private Text k = new Text();

    @Override
    protected void setup(Context context) throws IOException, InterruptedException {

        // 1 Get the cached file registered in the Driver with addCacheFile
        URI[] cacheFiles = context.getCacheFiles();
        String path = cacheFiles[0].getPath();

        // try-with-resources closes the reader even if parsing throws
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(new FileInputStream(path), "UTF-8"))) {
            String line;
            while ((line = reader.readLine()) != null) {
                // Skip blank lines instead of stopping at the first one
                if (StringUtils.isEmpty(line)) {
                    continue;
                }
                // 2 Split the line: pid \t pname
                String[] fields = line.split("\t");
                // 3 Cache the data in the map
                pdMap.put(fields[0], fields[1]);
            }
        }
    }

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {

        // 1 Get one line of the order table
        String line = value.toString();
        // 2 Split it
        String[] fields = line.split("\t");
        // 3 Get the product id
        String pId = fields[1];
        // 4 Look up the product name (null if the id is not in the cached table)
        String pdName = pdMap.get(pId);
        // 5 Append the product name to the order line
        k.set(line + "\t" + pdName);
        // 6 Write out
        context.write(k, NullWritable.get());
    }
}
```
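The cache-parsing step in `setup()` can be pulled into a plain-Java helper and unit-tested without a cluster. This matters because line-handling details are easy to get wrong: a loop guarded by `StringUtils.isNotEmpty(line = reader.readLine())`, for example, stops at the first blank line. This is a minimal sketch assuming a UTF-8, tab-separated `pid\tpname` file. The class name `CacheLoader` and its `load` method are hypothetical and not part of Hadoop or the original tutorial.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: the parsing logic of setup() isolated for testing. */
public class CacheLoader {

    /** Reads "pid\tpname" lines into a map, skipping blank or malformed lines. */
    public static Map<String, String> load(Reader source) throws IOException {
        Map<String, String> pdMap = new HashMap<>();
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                if (line.trim().isEmpty()) {
                    continue; // a blank line does not end the loop
                }
                String[] fields = line.split("\t");
                if (fields.length < 2) {
                    continue; // ignore lines without a name column
                }
                pdMap.put(fields[0], fields[1]);
            }
        }
        return pdMap;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical cache contents with an embedded blank line.
        Map<String, String> pd = load(new StringReader("01\tXiaomi\n\n02\tHuawei\n"));
        System.out.println(pd.get("01") + " " + pd.get("02"));
    }
}
```

Inside the Mapper, `setup()` could then fill `pdMap` by calling `CacheLoader.load(new InputStreamReader(new FileInputStream(path), "UTF-8"))`. The file-opening code stays in the Mapper, and the parsing rules become testable on their own.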